The block size limit is a consensus rule. If a block is larger than this limit, miners won’t incorporate it into the chain they are building. If a miner produces a block that isn’t incorporated into the chain by a majority of miners, the block will be orphaned and the miner that produced it will receive no compensation for its work. While Bitcoin could work without a preset limit, that would leave miners with a lot of uncertainty. It is useful for miners to know the limit observed by a majority of the mining power, and to have that limit defined by a clear and simple consensus rule.

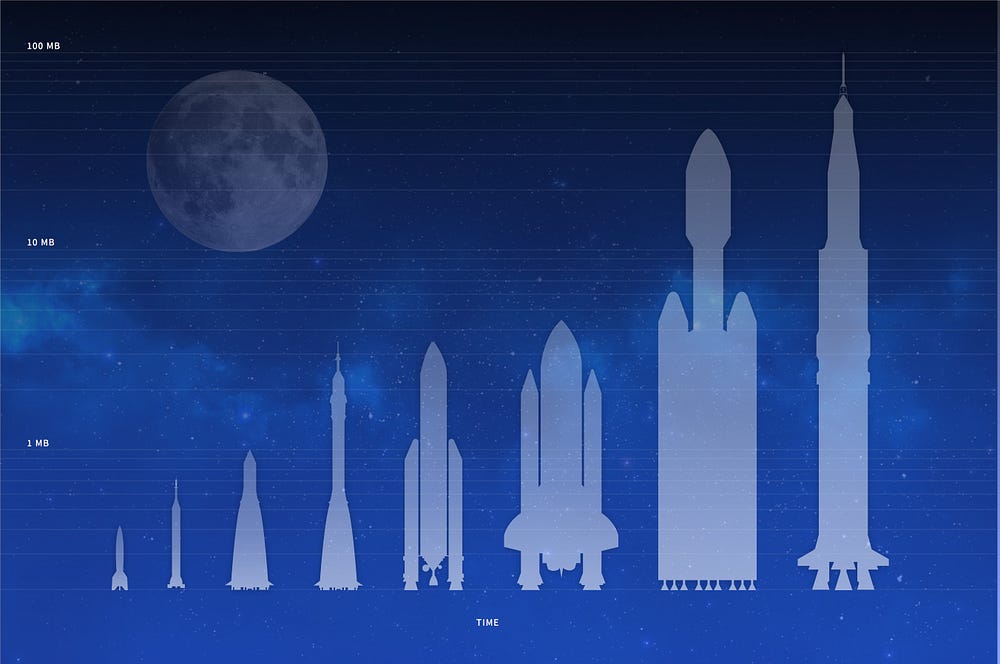

A fixed block size limit, such as Bitcoin’s current 1 MB limit, doesn’t allow transaction throughput to adjust to user demand or to scalability improvements. Today the volume of transactions routinely bumps up against this artificial limit. It would be straightforward to simply raise the fixed limit, but we would find ourselves having the same debate about raising it again in a few months. This debate is damaging enough as it is; dragging it out for another year or two could prove devastating to Bitcoin.

A rising, but still fixed, block size limit is also problematic. What if technological advances don’t keep pace with the increasing limit? We could find ourselves in a situation where the block size limit is so far above market demand that it’s practically irrelevant; we may as well not have a limit at all. Alternatively, the limit might end up well below both market demand and technological constraints, and a similar debate about raising it would rage.

A number of proposals have been put forth. BIP101 would increase the block size steadily over time according to a fixed function. It was opposed by a number of miners who felt it was too aggressive. If technology doesn’t keep pace with BIP101, miners would be left with a lot of uncertainty about the real, practical limit. BIP100 was viewed more favorably by miners, but it is a more complex code change. It also requires miners to be proactive in communicating their preferred block size limit. Conceptually, using the block chain to establish a consensus on the block size (as BIP100 does) is an excellent idea. After all, that’s how mining difficulty, an adaptive control, is established.

Miners need a simple, but adaptive consensus rule for determining the block size limit.

Of all the ideas we’ve examined, the one that seems most appealing is a simple adaptive limit based on a recent median block size. To determine the block size limit, you compute the median block size over some recent sample of blocks and apply a multiple. For example, you might set the limit to 2x the median block size of the last 2016 blocks. It’s worth noting that Ethereum employs a similar approach (though it uses an exponentially weighted moving average rather than a simple median). There have been a number of other so-called “flexcap” proposals that we think have needless complexity.
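As a minimal sketch of the basic rule, assuming block sizes are available as a list of byte counts ordered oldest first, the limit can be computed with Python’s `statistics.median`. The function name `adaptive_limit` is hypothetical, and its defaults (a multiple of 2 over the last 2016 blocks) simply mirror the example above rather than any specification.

```python
from statistics import median


def adaptive_limit(block_sizes, multiple=2, window=2016):
    """Return `multiple` times the median size of the last `window` blocks.

    block_sizes: block sizes in bytes, ordered oldest first (hypothetical input format).
    """
    recent = block_sizes[-window:]  # only the most recent `window` blocks count
    if not recent:
        raise ValueError("at least one block is needed to compute a median")
    # For an even-sized window, statistics.median averages the two middle values.
    return multiple * median(recent)
```

For instance, `adaptive_limit([500_000, 700_000, 600_000], window=3)` yields 1,200,000 bytes: the median of the three sizes is 600,000, doubled.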

In addition to a hard limit, there would be a configurable soft limit that would govern the size of blocks produced by a miner. This soft limit would also be a multiple of the median size of a recent sample of blocks. The soft limit would be easily configurable so that a miner could exert some influence over the future direction of the block size limit. A reasonable default between 1 and the hard limit multiple would be chosen (e.g. 1.5). A miner that would like to see the hard limit increased would set their multiple higher. A miner that wanted to see the hard limit decreased would set their multiple lower (below 1). The formulas are summarized below (where n is the number of recent blocks over which to compute the median):

`limit = m * median(n)`

`soft_limit = sm * median(n)`

The values for m and n are consensus rules, while the value of sm is a configuration setting with a reasonable default. Algorithmic details, such as rounding behavior or whether to adjust the limit with every block or only once every n blocks, would also be a matter of consensus. The current 1 MB limit would serve as a lower bound for the hard limit.
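Putting the pieces together, the sketch below computes both limits from the same median, applies the 1 MB floor to the hard limit, and checks a candidate block against it. The parameter values, rounding down to whole bytes, capping the soft limit at the hard limit, and the names `hard_limit`, `soft_limit`, and `is_valid_size` are all assumptions made for illustration; the proposal leaves such details to be settled by consensus.

```python
from statistics import median

# Hypothetical parameter values; the proposal leaves m, n, and rounding to consensus.
M = 2              # hard limit multiple (consensus rule)
N = 2016           # number of recent blocks in the median window (consensus rule)
FLOOR = 1_000_000  # today's 1 MB limit, kept as a lower bound on the hard limit
DEFAULT_SM = 1.5   # miner-configurable soft limit multiple (local setting, not consensus)


def recent_median(block_sizes, n=N):
    """Median size, in bytes, of the last n blocks (block_sizes is oldest first)."""
    return median(block_sizes[-n:])


def hard_limit(block_sizes, m=M, n=N, floor=FLOOR):
    """limit = m * median(n), rounded down to whole bytes, never below the floor."""
    return max(floor, int(m * recent_median(block_sizes, n)))


def soft_limit(block_sizes, sm=DEFAULT_SM, m=M, n=N, floor=FLOOR):
    """soft_limit = sm * median(n), capped at the hard limit since larger blocks are invalid."""
    return min(int(sm * recent_median(block_sizes, n)), hard_limit(block_sizes, m, n, floor))


def is_valid_size(new_block_size, block_sizes):
    """Consensus check: a new block may not exceed the limit set by the blocks before it."""
    return new_block_size <= hard_limit(block_sizes)
```

This sketch recomputes the limit at every block; recomputing only once every n blocks would instead fix the value at each window boundary.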

The choice to use the median, rather than the average, prevents miners from gaming the rule by producing blocks artificially inflated with their own transactions or, conversely, by producing empty blocks. Because the median depends only on the middle of the sorted block sizes, not on how extreme the outliers are, gaming it would require coordinated action by more than 50% of the mining capacity. Of course, if someone controlled more than 50% of the mining capacity, Bitcoin would have bigger issues to contend with than the block size limit.
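To illustrate why the median resists gaming, the sketch below models one 2016-block window in which some fraction of the blocks comes from miners who either stuff every block to 1,000,000 bytes or mine nearly empty (assumed 250-byte) blocks, while everyone else produces 400,000-byte blocks. All sizes and helper names are hypothetical. A minority can drag the mean far from 400,000 bytes, but the median does not move until the gaming miners find a majority of the blocks.

```python
from statistics import mean, median

WINDOW = 2016
HONEST_SIZE = 400_000  # hypothetical size of blocks from non-gaming miners, in bytes


def window_sizes(gamer_share, gamer_size):
    """Block sizes for one window in which a fraction `gamer_share` of the blocks
    comes from miners who always produce blocks of `gamer_size` bytes."""
    gamed = round(WINDOW * gamer_share)
    return [gamer_size] * gamed + [HONEST_SIZE] * (WINDOW - gamed)


def mean_and_median(gamer_share, gamer_size):
    """Mean and median block size for one modeled window."""
    sizes = window_sizes(gamer_share, gamer_size)
    return mean(sizes), median(sizes)


# Stuffed blocks at 1,000,000 bytes, and nearly empty blocks at an assumed 250 bytes.
for label, size in (("stuffed", 1_000_000), ("empty", 250)):
    for share in (0.10, 0.30, 0.49, 0.51):
        avg, med = mean_and_median(share, size)
        print(f"{label:>7} {share:.0%}: mean={avg:,.0f} median={med:,.0f}")
```

With 30% of the blocks stuffed, the mean rises to roughly 580,000 bytes while the median stays at 400,000; at 51%, the median jumps to the gaming miners’ block size.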

With this adaptive rule for the block size limit, the throughput of the Bitcoin network can increase to meet the demands of its users while remaining constrained by current scaling limitations. As miners produce larger blocks, they’ll experience higher orphan rates. As orphan rates rise, they will moderate the size of their blocks and be more selective about the transactions they include. They’ll also have an incentive to pay people to improve Bitcoin’s scalability.
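The way the limit follows demand can be explored with a toy simulation. It assumes every miner uses the same soft-limit multiple, fills each block up to the smaller of user demand and the soft limit, and recomputes the limit once per 2016-block window. It deliberately ignores orphan rates, so it shows only how the limit tracks demand, not how scaling constraints cap it. The parameters and the `simulate` function are hypothetical.

```python
from statistics import median

# Hypothetical parameters for illustration only.
M, SM, N, FLOOR = 2, 1.5, 2016, 1_000_000


def simulate(demand_per_window, start_size=800_000):
    """Return the hard limit in force during each window.

    demand_per_window: bytes of transactions users want in each block, one value per
    window (hypothetical model). Every miner uses the same soft-limit multiple SM,
    the limit is recomputed once per window, and orphan rates are ignored.
    """
    history = [start_size] * N  # block sizes before the simulation starts
    limits = []
    for demand in demand_per_window:
        med = median(history[-N:])
        hard = max(FLOOR, int(M * med))
        soft = min(int(SM * med), hard)
        limits.append(hard)
        # Each block in this window carries the demand, up to the miner's soft limit.
        history.extend([min(demand, soft)] * N)
    return limits
```

With demand of 1,500,000 bytes per block for three windows followed by 300,000 bytes for two, `simulate` returns limits of 1.6, 2.4, 3.0, 3.0, and 1.0 million bytes: the limit climbs until it is twice the demand, then falls back to the 1 MB floor once demand drops.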